Socially Trustable Drones: Human Centered Robotic System Design

This research focuses on modeling human perception of safety in close proximity to flying co-robots. We created a fast, automated framework for generating these models, built around a virtual reality (VR) testbed in which participants’ reactions to flying co-robots are measured. A biometric data acquisition system records each participant’s skin conductance, heart rate, and head tilt, and these signals serve as data for learning the perception of safety as a function of the robot’s movement. The experimental framework provides precise measurement of the stimuli the subject receives from the VR environment, together with time-stamped acquisition of skin conductance and heart rate. The following video shows more details about the VR simulator we built for this research.

[youtube]https://www.youtube.com/watch?v=gRN_dfu94dY[/youtube]

The following video shows more details about the experiments.

[youtube]https://youtu.be/SIrwms86Cp8[/youtube]

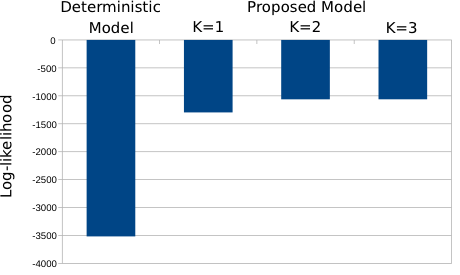

Virtual reality (VR) serves as a safe testing environment for collecting psychological data from participants who experience a flying robot in their vicinity. The challenge in data collection is distraction: the participant’s attention is focused not only on the robot but also on other stimuli. This produces irrelevant data samples, for which the arousal measurement is unrelated to the main objective of the experiment. Applying standard regression methods without accounting for this unknown factor results in an incorrect model. To overcome this issue, the present approach models the shift in the participant’s focus of attention as a latent discrete random variable, which clusters the data samples into two groups: relevant and irrelevant. The algorithm achieves a higher log-likelihood than the standard least-squares solution, as shown in Fig 1.

Fig 1. Log-Likelihood with Test Data. The deterministic model refers to a standard regression that minimizes the squared error.
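The latent-variable idea described above can be illustrated with a minimal sketch. It is not the model from the publications listed on this page. It assumes a one-dimensional input (robot distance), a linear-Gaussian model for relevant samples, a single broad Gaussian for irrelevant samples, and fitting by expectation-maximization (EM). The function name `fit_latent_attention_regression` and the synthetic data are hypothetical and serve only as illustration.

```python
import numpy as np


def gaussian_pdf(y, mean, var):
    """Density of a univariate Gaussian evaluated elementwise."""
    return np.exp(-0.5 * (y - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def fit_latent_attention_regression(X, y, n_iter=100):
    """EM for a two-component mixture (illustrative assumption, see lead-in).

    z = 1 (relevant):   y ~ N(w . [x, 1], var_rel)   depends on the robot
    z = 0 (irrelevant): y ~ N(mu_irr, var_irr)       independent of the robot
    Returns the regression weights and P(z = 1 | data) for every sample.
    """
    n = len(y)
    Xb = np.column_stack([X, np.ones(n)])  # append a bias column

    # Initialize from ordinary least squares and the global statistics of y.
    w = np.linalg.lstsq(Xb, y, rcond=None)[0]
    var_rel = np.var(y - Xb @ w) + 1e-6
    mu_irr, var_irr = np.mean(y), np.var(y) + 1e-6
    pi_rel = 0.5  # prior probability that a sample is relevant

    for _ in range(n_iter):
        # E-step: posterior probability that each sample is relevant.
        p_rel = pi_rel * gaussian_pdf(y, Xb @ w, var_rel)
        p_irr = (1.0 - pi_rel) * gaussian_pdf(y, mu_irr, var_irr)
        resp = p_rel / (p_rel + p_irr + 1e-300)

        # M-step: weighted least squares for the relevant component.
        sw = np.sqrt(resp)
        w = np.linalg.lstsq(Xb * sw[:, None], y * sw, rcond=None)[0]
        var_rel = np.sum(resp * (y - Xb @ w) ** 2) / (np.sum(resp) + 1e-12) + 1e-6

        # M-step: weighted mean and variance for the irrelevant component.
        q = 1.0 - resp
        mu_irr = np.sum(q * y) / (np.sum(q) + 1e-12)
        var_irr = np.sum(q * (y - mu_irr) ** 2) / (np.sum(q) + 1e-12) + 1e-6

        pi_rel = np.mean(resp)

    return w, resp


# Synthetic example (made-up numbers): arousal falls with distance when the
# participant attends to the robot, and is unrelated to distance otherwise.
rng = np.random.default_rng(0)
n = 400
distance = rng.uniform(0.5, 5.0, n)
attending = rng.random(n) < 0.7
arousal = np.where(
    attending,
    3.0 - 0.5 * distance + rng.normal(0.0, 0.1, n),
    rng.normal(1.6, 1.5, n),
)

w_em, resp = fit_latent_attention_regression(distance, arousal)
w_ols = np.linalg.lstsq(np.column_stack([distance, np.ones(n)]), arousal, rcond=None)[0]
print("EM slope:", w_em[0], "least-squares slope:", w_ols[0])
```

In this sketch the responsibilities `resp` play the role of the soft cluster assignment: samples with a low value contribute little to the regression weights, which is what keeps distracted samples from biasing the fit.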

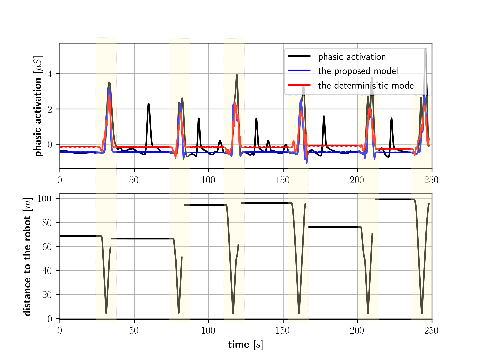

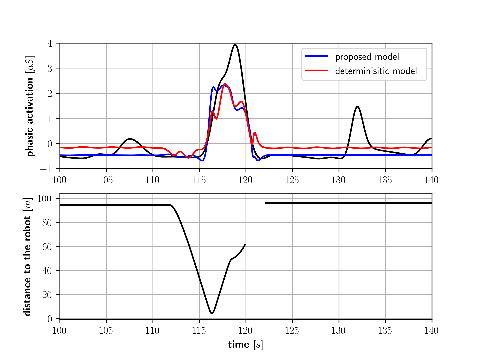

Fig 2. Prediction of arousal as a function of the robot’s movement (position, velocity).

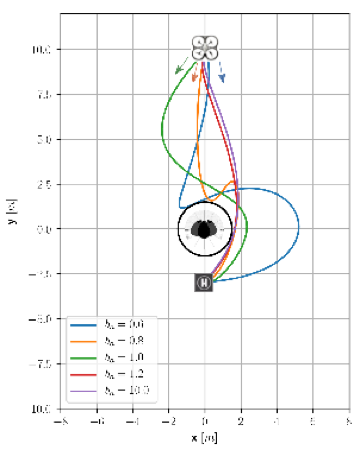

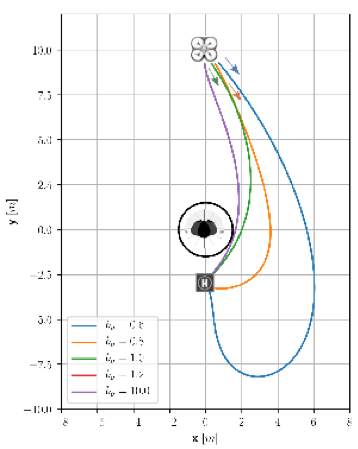

Optimal paths generated using the proposed model keep a reasonable safety distance from the human. In contrast, the paths generated with the standard regression model have undesirable shapes due to over-fitting, as shown in Fig 3.

Fig 3. Flight Path generated using the arousal model.
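As a rough illustration of how an arousal model can shape a flight path, the sketch below scores a family of candidate paths by path length plus a weighted integral of predicted arousal, then picks the cheapest one. Everything in it is an assumption made for illustration: the exponential-decay `predicted_arousal` function stands in for a learned model, and the sinusoidal path family, the weight of 20, and the start and goal positions are arbitrary. It is not the planner used in this project.

```python
import numpy as np

HUMAN = np.array([0.0, 0.0])  # assumed human position in the plane (meters)


def predicted_arousal(points):
    """Hypothetical stand-in for a learned arousal model: decays with distance."""
    d = np.linalg.norm(points - HUMAN, axis=1)
    return np.exp(-d)


def make_path(bulge, n_points=201):
    """Path from (-5, 0.5) to (5, 0.5) that bends away from the human by `bulge`."""
    s = np.linspace(0.0, 1.0, n_points)
    x = -5.0 + 10.0 * s
    y = 0.5 + bulge * np.sin(np.pi * s)
    return np.column_stack([x, y])


def path_cost(path, arousal_weight=20.0):
    """Path length plus weighted line integral of predicted arousal."""
    seg = np.diff(path, axis=0)
    seg_len = np.linalg.norm(seg, axis=1)
    midpoints = 0.5 * (path[:-1] + path[1:])
    arousal_integral = np.sum(predicted_arousal(midpoints) * seg_len)
    return np.sum(seg_len) + arousal_weight * arousal_integral


# Grid search over the single path parameter.
bulges = np.linspace(0.0, 4.0, 41)
costs = np.array([path_cost(make_path(b)) for b in bulges])
best_bulge = bulges[np.argmin(costs)]
best_path = make_path(best_bulge)
min_distance = np.min(np.linalg.norm(best_path - HUMAN, axis=1))
print("best bulge:", best_bulge, "closest approach:", min_distance)
```

The trade-off is visible in the cost: a larger bulge lengthens the path but lowers the accumulated arousal, so the selected path keeps more distance from the human than the straight line does. An over-fitted arousal model would distort the same cost and, with it, the path shape.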

This video gives a brief overview of this research project:

[youtube]https://www.youtube.com/watch?v=sjRNY07FKTg[/youtube]

Publications and talks:

- H.-J. Yoon and N. Hovakimyan, “VR Environment for the Study of Collocated Interaction between Small UAVs and Humans,” AUA Data Science Workshop, Yerevan, Armenia, May 2017

- H.-J. Yoon and C. Widdowson, “Regression of Human Physiological Arousal Induced by Flying Robots Using Deep Recurrent Neural Networks,” Coordinated Science Lab Student Conference 2017, University of Illinois at Urbana-Champaign

- T. Marinho, A. Lakshmanan, V. Cichella, C. Widdowson, H. Cui, R. M. Jones, B. Sebastian, and C. Goudeseune, “VR Study of Human-Multicopter Interaction in a Residential Setting,” IEEE Virtual Reality (VR), March 2016, pp. 331–331.

- T. Marinho, C. Widdowson, A. Oetting, A. Lakshmanan, H. Cui, N. Hovakimyan, R. F. Wang, A. Kirlik, A. Laviers, and D. Stipanovic, “Carebots: Prolonged Elderly Independence Using Small Mobile Robots,” Mechanical Engineering, vol. 138, no. 9, pp. S8–S13, September 2016.

- H.-J. Yoon, T. Marinho, and N. Hovakimyan, “A Path Planning Framework for a Flying Robot in Close Proximity of Humans,” IEEE/RSJ International Conference on Intelligent Robots and Systems, Madrid, Spain, October 2018 (Submitted)